Demonstrations

Ubicomp 2003 Adjunct Proceedings (PDF 52MB).

|

Detection of Safety Hazards Using Cooperating Chemical Containers

Martin Strohbach

Lancaster University

|

|

Many ubiquitous computing systems and applications rely on knowledge about activity and changes in a physical environment. How systems acquire, maintain and react to models of their changing environment has become one of the central research challenges in the field. This demonstrator presents a novel concept and technology designed for applications in which essential aspects of the world are modelled by a collection of Cooperative Artefacts. Cooperative Artefacts are physical artefacts that incorporate embedded domain knowledge, perceptual intelligence and cooperative, rule-based reasoning. Our demonstrator shows how the technology can be used to detect safety hazards that arise from improper storage of chemical containers, as can occur in chemical processing plants. We instrument real chemical containers with Particle Smart-its and ultrasound transducers, so that the containers can detect hazards by exchanging knowledge about containers in their proximity. Because external infrastructure is prohibitive in these environments, our technology is independent of it.
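As a rough illustration of the rule-based, proximity-driven reasoning described above, the following Python sketch shows one container checking its neighbours against a storage rule. Everything in it is an assumption made for illustration: the `Container` type, the `INCOMPATIBLE` rule table, the chemical class names, and the use of 2D coordinates in place of ultrasound ranging do not come from the demonstrator itself.

```python
from dataclasses import dataclass
from math import dist

# Hypothetical rule base: pairs of chemical classes that must not be stored together.
INCOMPATIBLE = {
    frozenset({"oxidizer", "flammable"}),
    frozenset({"acid", "base"}),
}


@dataclass(frozen=True)
class Container:
    name: str
    chemical_class: str
    position: tuple  # (x, y) in metres; stands in for ultrasound ranging


def hazards_seen_by(me, others, max_distance=1.0):
    """Return names of nearby containers whose contents conflict with mine.

    Each container evaluates the rule locally, using only what it knows
    about itself and what its neighbours report (no external infrastructure).
    """
    conflicts = []
    for other in others:
        if other is me:
            continue
        near = dist(me.position, other.position) <= max_distance
        pair = frozenset({me.chemical_class, other.chemical_class})
        if near and pair in INCOMPATIBLE:
            conflicts.append(other.name)
    return conflicts


a = Container("A", "oxidizer", (0.0, 0.0))
b = Container("B", "flammable", (0.5, 0.0))
c = Container("C", "flammable", (5.0, 0.0))
print(hazards_seen_by(a, [a, b, c]))
```

Here container A reports a conflict with B, which is within range, but not with C, which holds the same class of chemical at a safe distance.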

|

|

MobiTip: Using Bluetooth as mediator of social context

Åsa Rudström, Martin Svensson, Rickard Cöster, and Kristina Höök

SICS

|

|

MobiTip is a social mobile service where comments or tips given by one person are made available to another when users pass each other and their devices connect, when they approach connection hotspots, or on demand. Bluetooth connectivity is used to form a social space of nearby devices that serves as key input for the collaborative filtering of tips. The social space is visualized to show other users nearby, thereby illustrating where tips come from and why they arrive at a particular point in time.

|

|

Practical considerations using eSeals

Albert Krohn, Michael Beigl, Christian Decker, Philip Robinson and Tobias Zimmer

Teco, University of Karlsruhe

|

|

While wax seals were used in the past to ensure integrity, electronic devices can now take over this function and provide better, finer-grained, more automated and more secure supervision. This demo shows a prototype eSeal system, with a computational device at its core that can be attached to a good and configured automatically using active tags. The eSeal monitors and seals different states sensed from the object's physical conditions. The eSeal is designed and implemented with flexibility in mind, allowing configurable integrity control settings. The system works with minimal infrastructure requirements and is functionally self-contained, so that goods that are only accessible in certain locations can still be supervised. A major outcome of the eSeal prototype system demo is support for intuitive user interaction with regard to configuration and handling of the devices in the activity chain.

|

|

Seamful Games

Matthew Chalmers, Marek Bell, Barry Brown, Malcolm Hall, Scott Sherwood, Paul Tennent

University of Glasgow

|

|

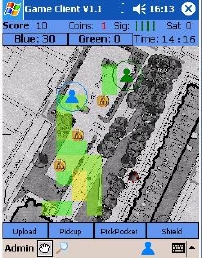

Seamful games are mobile multiplayer games, designed to let people take advantage of the limits and gaps of ubicomp infrastructure e.g. wireless networks and positioning systems. In this demo, two teams of players use PDAs with GPS and 802.11, to gain information from a server about the position of periodically appearing ‘coins’, the locations of other players, and wi-fi signal strength as sampled by players during the game. To gain points, a player has to get close to a coin (according to GPS), and use a GUI 'Pickup' command to pick it up. Then, the player can 'Upload' the coins he or she is carrying, and get a point for each coin. If two players in the same team upload coins to the same access point at the same time, each coin is worth double points.

The game has an inbuilt tension between being in net coverage and being out. Initially, players are uncertain as to where there is coverage, but they can watch and talk to other players as they move, and use the dynamically updated 802.11 map overlay as they discover new access points and reveal more of the coverage to each other. Coins often appear in areas where there is no coverage, but one needs net coverage in order to upload coins and get game points. When one is in coverage, one can also get updates on players’ positions, new coins and net coverage, and one can use the 'Pickpocket' command. This steals coins out of the PDAs of any nearby players, so... watch out!
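The upload scoring rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the tuple layout, the function name `upload_points`, and the idea of bucketing "the same time" into discrete time slots are hypothetical and not taken from the game's actual implementation.

```python
from collections import defaultdict


def upload_points(uploads):
    """Score a batch of uploads.

    uploads: list of (player, team, access_point, time_slot, coins).
    A coin is worth 1 point, or 2 points when at least two different
    players of the same team upload to the same access point in the
    same time slot (the slot granularity is an assumption).
    """
    # Collect which players of each team used each access point per slot.
    groups = defaultdict(set)
    for player, team, ap, slot, _ in uploads:
        groups[(team, ap, slot)].add(player)

    points = defaultdict(int)
    for player, team, ap, slot, coins in uploads:
        multiplier = 2 if len(groups[(team, ap, slot)]) >= 2 else 1
        points[player] += coins * multiplier
    return dict(points)


example = [
    ("ann", "red", "ap1", 0, 3),
    ("bob", "red", "ap1", 0, 2),
    ("cat", "blue", "ap1", 0, 4),
]
print(upload_points(example))
```

In this example the two red players upload together and have their coins doubled, while the blue player, uploading alone at the same access point, scores one point per coin.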

|

|

eXspot: A Wireless RFID Transceiver for Recording and Extending Museum Visits

Sherry Hsi

The Exploratorium / University of Washington / Intel Research Seattle

|

|

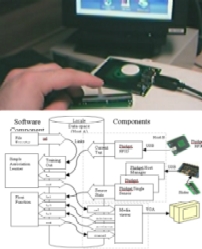

The eXspot is a museum-based application that leverages RFID technologies to support, record, and extend exhibit-based, informal science learning at the Exploratorium—an interactive hands-on museum of art, science and perception. Science museum visitors are often pressed to attend scheduled events and to see as many exhibits as possible in a single visit, leaving little time to experiment, learn and reflect upon deeper ideas and science concepts behind an exhibit. The eXspot project addresses this problem by allowing users to “tag” exhibits that interest them during their visits and learn more about them online after their visits.

The eXspot application consists of low-power RFID “eXspot” transceivers (pictured above) placed throughout the museum and wirelessly connected to a logging server. Visitors receive an eXspot ID card upon their arrival at the museum, and can place their ID tags on any of the eXspots to “tag” that exhibit. Their ID number and any other information collected at the exhibit are saved on the eXspot server. Some exhibits are also wired with cameras to take pictures of visitors as keepsakes. When visitors return home, they log in to the eXspot Web pages to learn more about the science behind the exhibits they visited and even see pictures of their families at those exhibits. The eXspot demo will show a typical exhibit and how a visitor would interact with it to extend his/her museum experience.

|

|

Supporting Collaborative Scheduling with Interactive Pushpins and Networking Surfaces

Fredrik Helin, Theresia Höglund, Robert Zackaroff, Maria Håkansson, Sara Ljungblad & Lars Erik Holmquist

Viktoria Institute

|

|

We have developed a prototype to explore support for tangible and collaborative scheduling. The prototype is based on the Pin&Play technology and has been developed with a film festival team that conducts collaborative scheduling on large surfaces. The prototype shows how the festival team could retain the advantages of the current practice of large pin boards and paper notes, while gaining many additional functions only possible through digital augmentation.

|

|

tunA: Synchronised Music-Sharing on Handheld Devices

Arianna Bassoli

Media Lab Europe

|

|

tunA is a mobile peer-to-peer application that allows users to share their music locally with others who happen to be in their physical proximity. Playback is synchronized on all tunA devices that are 'tuned into' the same source. These handheld devices are connected via ad-hoc 802.11 wireless networks formed as they come into and out of range with each other. With tunA, music constitutes the primary focus around which new social connections can be made and existing ones maintained. The localised dimension of the application aims to enable the creation of links between the virtual world of digital communication and file exchange and the physical reality in which users are immersed every day.

|

|

Malleable Mobile Music

Atau Tanaka

Sony CSL Paris

|

|

Malleable Mobile Music is a system for collaborative musical creation on mobile wireless networks. It extends music listening from a passive act to a proactive, participative activity. Social dynamics and mobility are inputs to an audio re-composition engine, enabling communities of listeners to experience familiar music in new ways. The system consists of mobile terminals, sensor subsystems, a geographic localization simulator, and a dynamic stream server. It serves as a platform for experiments studying the sense of agency in the collaborative creative process and the requirements for fostering musical satisfaction in remote collaboration. A community of listeners chooses to listen to a single piece of adaptive music. No longer a fixed entertainment medium, the music becomes a malleable content form fostering shared experience.

|

|

Sharing Memories: “The UbiComp Scrapbook”

Aaron J. Quigley, David West

University of Sydney

|

|

This demonstration presents our Virtual Personal Server Space (VPSS) to support applications for enhancing the sense of purpose and social wellbeing of the elderly by promoting reminiscence and communication activities. We present our digital scrapbook application for memory sharing and a multimodal email application, including our novel pen and paper based 'drawable' user interface. Over the course of the Ubicomp 2004 conference, this demonstration will produce a physical scrapbook with a corresponding online digital scrapbook augmented with images and sounds from our multi-modal, multi-device ubiquitous computing system.

|

|

Using Camera-Phones to Enhance Human–Computer Interaction

Anil Madhavapeddy, David Scott, Richard Sharp, Eben Upton

University of Cambridge / Intel Research Cambridge

|

|

The proliferation of camera-phone technology provides a global opportunity for novel ubiquitous computing applications that is, as yet, largely unexploited. Our work demonstrates some of the untapped potential of this existing infrastructure, focusing on the use of deployed camera-phones as devices to enhance human-computer interaction.

We show that, without requiring additional hardware, an existing camera-phone can be used as both a sophisticated pointing device, facilitating interaction with active displays, and a user interface for devices without displays or input capability of their own. Our implementation relies on visual tags, which can be detected and decoded by camera-phones, and short-range wireless communication between camera-phones and nearby computers (e.g. Bluetooth).

|

|

Sculpting Home Atmospheres

Philip Ross1 and David Keyson2

1Technische Universiteit Eindhoven, 2Delft University of Technology

|

|

Ambient technology is entering the home environment, the place we play, relax, argue, entertain, sulk, etc. Although the technology disappears into the background, physical interfaces between humans and systems remain necessary. A key design challenge is how to design these interfaces to fit in the everyday context. This demonstration shows the results of a case study, in which a living room atmosphere projection system and its rich interactive interface, called the Carrousel, were designed and built.

The Carrousel offers expressive physical interaction, incorporating playfulness, aesthetics and creativity. Its design is based on expressiveness of form, material and movement. It resembles an abstract sculpture and allows a person to dynamically ‘sculpt’ a desired atmosphere. The expressions made on the Carrousel are projected on the living room space through interplay of coloured lights, audio and animated wallpaper.

|

|

bYOB (Build Your Own Bag): A computationally-enhanced modular textile system

Gauri Nanda

MIT

|

|

We present bYOB (Build Your Own Bag), a flexible, computationally-enhanced modular textile system from which to construct smart fabric objects. bYOB was motivated by a desire to transform everyday surfaces into ambient displays for information and to make building with fabric as easy as playing with Lego blocks. In the realm of personal architecture, bYOB is an interactive material that encourages users to explore and experiment by creating new objects to seamlessly integrate into their lives. The physical configuration of the object mediates its computational behavior. Therefore, an object built out of the system of modular elements understands its geometry and responds appropriately without any end-user programming. Our current prototype is a bag built out of the system that understands it is a bag when the handle is attached to the mesh of modules and responds with sound and light to illuminate inner contents when it is dark, to alert users when personal items are missing, and to communicate information downloaded from the Internet.

|

|

KU: iyashikei-net

Urico Fujii and Ann Poochareon

Interactive Telecommunication Program, New York University

|

|

KU: iyashikei-net is an interactive networked sculpture installation that allows people to communicate through the interface of tears, a physical output of human emotional expression that has not been made exchangeable with current communication devices.

Tears are an output of a heightened state of human emotion. Often, when a person is extremely emotional, he or she cannot speak, write, or sing. In such cases, tears are the only source of emotional expression. Unfortunately, when people communicate over digital networks, tears are neither seen nor felt by the person on the other side of the networked devices and services, e.g. cellular phones, email, or instant messaging. The goal of the project is to make the transportation of tears in networked communication possible, and to remind people that natural physical outputs of human expression such as tears are an essential key to communication, which may result in a better understanding of one another.

|

|

iBand: a wearable device for handshake-augmented interpersonal information exchange

Marije Kanis1, Niall Winters2, Stefan Agamanolis1, Cian Cullinan1, Anna Gavin1

1Human Connectedness Group, Media Lab Europe, 2London Knowledge Lab, Institute of Education, University of London

|

|

iBand is a technology-enhanced bracelet that can store, display, and exchange information about you and your relationships. This exchange occurs during a common user-initiated one-to-one gestural interaction between two people: a handshake. iBand aims to leverage the familiar nature of the handshake, coupled with the qualities of jewelry to act as tangible keepsakes and reminders of relationships, to explore potential applications at the intersection of social networking and ubiquitous computing.

|

|

MouseField: A Simple and Versatile Input Device for Ubiquitous Computing

Toshiyuki Masui1, Koji Tsukada2, Itiro Siio3

1AIST, 2Keio University, 3Tamagawa University

|

|

A MouseField is a robust and versatile input device that can be used almost anywhere for controlling information appliances. A MouseField consists of an ID recognizer and motion sensors that can detect an object and its movement after the object is placed on it. By placing an object with an RFID tag on a MouseField and sliding or rotating the object, a user can control various systems just as one uses a standard mouse to control a standard PC.

|

|

u-Photo Tools: Photo-based Application Framework for Controlling Networked Appliances and Sensors

Genta Suzuki, Daisuke Maruyama, Takuya Koda, Shun Aoki, Takeshi Iwamoto, Kazunori Takashio, and Hideyuki Tokuda

Keio University

|

|

A u-Photo is a digital photo image that includes information about networked appliances and sensors in a ubiquitous computing environment.

u-Photo Tools provide methods for generating and viewing u-Photos. Users can easily look up information about networked devices using the 'taking a photo' metaphor provided by u-Photo Tools. Moreover, users can intuitively view the information and control the devices using a photo-based GUI.

The key to this research is our novel medium, the u-Photo. A u-Photo records the status of a service (e.g. the status of a playing video, or sensor information), and users can easily share devices by sharing u-Photos.

|

|

GLOWBITS

Daniel Hirschmann

Interactive Telecommunication Program, New York University

|

|

The Glowbit System is an attempt to explore the potentials offered by an actuated tactile interface. It offers multiple levels of interaction to augment the user experience. The Glowbits change color like the pixels in any display system and simultaneously physically move to different locations along a linear track. Their position and color are related: as images shift, so too does the topology of the Glowbits surface.

|

|

Ringing the Doorbell—Configuring Sensor Networks

Bernard Horan, David Anderson, James “Bo” Begole, Gabriel Montenegro, Stéphane Perret, Randall Smith, Robert Tow, Bruno Zoppis

Sun Microsystems Laboratories, Europe

|

|

Much work on Wireless Sensor Networks (WSNs) is focused on the functionality afforded by the devices and the means by which they communicate. This demonstration focuses on the programming model and deployment mechanisms for a WSN. We present a visual environment in which the services provided by sensors can be assembled and configured to fulfil the needs of an example scenario: a visitor ringing the doorbell of someone’s home.

|

|

Context-Based Seamless Network and Application Control

Masugi Inoue, Mikio Hasegawa, Nobuo Ryoki, Hiroyuki Morikawa

National Institute of Information and Communications Technology (NICT)

|

|

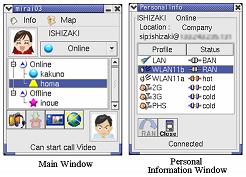

A context-based adaptive communication system is introduced for use in heterogeneous networks. The context includes the user's presence and profile, such as location, available network interfaces, network availability, network priority, terminal features, and installed applications. The system operates on a seamless networking platform we developed for heterogeneous networks. By using contexts, the system shows a caller and callee, in advance of communication, the applications that are available through the networks they can access. Changes in context can switch the ongoing application to another during communication. These features enable unprecedented styles of communication.

|

|

ECT: A Toolkit to Support Rapid Construction of Ubicomp Environments

Chris Greenhalgh, Shahram Izadi, James Mathrick, Jan Humble, Ian Taylor

University of Nottingham

|

|

We present the initial release of the Equip Component Toolkit (ECT), a freely available software toolkit for ubiquitous computing that can reduce the cost, especially in time and effort, of developing, deploying and managing ubicomp applications and environments, and increase the potential involvement of designers and users in this process. This first release is targeted in particular at 'installation'-type systems, such as 'augmented' homes, museum exhibits, classrooms and lab spaces. Our approach emphasizes support for legacy and non-toolkit components (such as ordinary JavaBeans), rather than requiring coding specifically for the toolkit. We will be demonstrating the toolkit's approach to rapid interactive development of small installations using various supported hardware and software components including SmartITs, Phidgets, and Bloggers. The toolkit is publicly available and we encourage others to use it and contribute components, interfaces and other extensions: see http://www.equator.ac.uk/technology/ect .

|

|

Multi-Hop on Table-Top: A Scalable Evaluation Workbench for Wireless Ad-Hoc/Sensor Network Systems

Kazuhiro Takeda, Koji Shuto, Kiyoto Tani, Yu Enokibori, Gaute Lambertsen and Nobuhiko Nishio

Ritsumeikan University / Japan Science and Technology Agency

|

|

We will demonstrate an experimental environment for ad-hoc/sensor network systems, in which complete network experiments can be conducted on top of a table. In our system, each node retrieves the radio signal strength of its neighbor nodes and uses a threshold value to determine whether communication can take place, thereby controlling connectivity and allowing for spatial/temporal scalability of the network. In addition, the system can run sensor network experiments independent of any application type: it supports a sensor emulator that can be added to each node, an event emulation tool that generates events for the sensors, and a query dispatcher that injects queries into the network.
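The threshold-based connectivity control described above might look roughly like the following Python sketch. The function name `connectivity`, the dictionary layout, and the dBm values are illustrative assumptions, not details of the actual workbench.

```python
def connectivity(rssi, threshold_dbm):
    """Derive each node's usable neighbours from measured signal strength.

    rssi: dict mapping (node, neighbour) -> received signal strength in dBm.
    A link is treated as usable only if its strength meets the threshold,
    so raising the threshold shrinks each node's neighbourhood and forces
    multi-hop routes even when all nodes sit on the same table.
    """
    links = {}
    for (node, neighbour), strength in rssi.items():
        links.setdefault(node, set())
        if strength >= threshold_dbm:
            links[node].add(neighbour)
    return links


measurements = {
    ("a", "b"): -40,
    ("a", "c"): -70,
    ("b", "a"): -42,
}
print(connectivity(measurements, -50))
print(connectivity(measurements, -80))
```

With the stricter threshold, node `a` can reach only `b`; relaxing the threshold also admits the weaker link to `c`.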

|

|

A Demonstration of Capture/Playback: A Strategy for Prototyping Applications in the Physical World

Steven Dow, Blair MacIntyre, Maribeth Gandy, and Jay David Bolter

Georgia Institute of Technology

|

|

Designers of digital applications that deal with complicated infrastructures in the physical world often deal with the formidable challenges of working in a specific place. During development and testing, the designer must constantly move around the physical space to debug or test new interactions. In outdoor settings, factors such as weather, poor work ergonomics, and the lack of power and networking are serious impediments for designers. Our strategy is to use a flexible capture/playback infrastructure, which is tightly integrated into a design environment for prototyping physical applications. We are able to capture the sensor data necessary to design and build applications, which can be easily deployed to the real environment. We will demonstrate this approach within the context of DART (the Designer's Augmented Reality Toolkit) by showing how augmented reality applications (such as mocking up the placement of information displays) can be quickly prototyped and tested using captured data sets. The capture/playback method breaks the requirement that sensors be used synchronously, in realtime, in the actual location and allows the designer to design around specific usage 'scenarios'. The flexible programming environment of Director used in DART will allow us to demonstrate this design process to conference attendees.

|

|

Audio Location: Accurate Low-Cost Location Sensing

James Scott

Intel Research Cambridge

|

|

Audio Location is a very low-cost fine-grained location sensing mechanism. It is based on the use of cheap commodity microphones attached to cheap PCI sound cards in a standard PC. In the initial prototype, the system can detect human-made sounds such as the clicking of fingers or clapping to accuracies of around 15cm for a 3D location. This is useful as a fine-grained location sensing mechanism, and is used in the demonstration to control a music player application by using finger-clicks on virtual 'buttons' in 3D space without the need for any physical interface.

|

|

Fast, Detailed Inference of Diverse Daily Human Activities

Matthai Philipose

Intel Research Seattle

|

|

The ability to detect what humans are doing has long been a key part of the ubiquitous computing agenda. We demonstrate a system that can detect many day-to-day human activities in considerable detail. The system has three novel components: sensors based on Radio Frequency Identification (RFID) tags, a library of roughly 38,000 human activity models mined from the web, and a fast, approximate inference engine. We show how the inferences derived can be used to drive two applications from the eldercare space. The Caregiver’s Assistant, targeted at the eldercare professional, automatically fills out entries from a state-mandated Activities of Daily Living form. The CareNet Display, targeted at family caregivers, provides up-to-date information about the elder’s activities.

|

|

MITes: Wireless Portable Sensors for Studying Behavior

Emmanuel Munguia Tapia, Natalia Marmasse, Stephen S. Intille, Kent Larson

MIT

|

|

In order to study human activities and behavior in natural settings such as the home, a portable sensing infrastructure that can be easily retrofitted in existing homes and that can cope with the complexity of everyday life is required. We have created MITes (MIT Environmental Sensors), a portable kit of ubiquitous wireless sensing devices for realtime data collection of human activities in natural settings. The sensors are designed to be used in two ways: (1) determining people's interaction with objects in the environment, and (2) measuring acceleration on different parts of the body. The sensors have been designed to permit low-cost research studies where data is acquired simultaneously from hundreds of objects in an environment and multiple parts of the body.

|
